Introduction:

In this article, we explore deep learning, a distinctive branch of machine learning and artificial intelligence. We look at how deep learning algorithms uncover patterns in data and make predictions, what makes them powerful, where they are used, and where they fall short.

Understanding the Essence of Deep Learning:

At its core, deep learning is a way to automate predictive analytics. Many traditional machine learning algorithms are linear. Deep learning algorithms, by contrast, are stacked in a hierarchy of increasing complexity and abstraction. This layered design is loosely inspired by the way humans learn, and it lets deep learning models extract useful structure from very large amounts of data.
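
Why does the nonlinearity between layers matter? The minimal Python sketch below, using arbitrary illustrative weights (the matrices `W1` and `W2` are hypothetical), shows that two purely linear layers collapse into a single matrix, so stacking them adds no expressive power. Inserting a nonlinearity such as ReLU between the layers breaks that collapse, which is what allows depth to build progressively more abstract representations.

```python
# Minimal sketch: the weight values below are arbitrary, chosen only for illustration.

def matvec(m, v):
    """Multiply a matrix (a list of rows) by a vector."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]

def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    cols = list(zip(*b))
    return [[sum(x * y for x, y in zip(row, col)) for col in cols] for row in a]

def relu(v):
    """Nonlinearity: keep positive values, replace negatives with zero."""
    return [max(0.0, x) for x in v]

W1 = [[1.0, -2.0], [0.5, 1.0]]   # hypothetical first-layer weights
W2 = [[2.0, 1.0], [-1.0, 3.0]]   # hypothetical second-layer weights

def two_linear_layers(x):
    return matvec(W2, matvec(W1, x))

def two_layers_with_relu(x):
    return matvec(W2, relu(matvec(W1, x)))

# A single matrix reproduces the purely linear stack exactly...
W_single = matmul(W2, W1)
x = [1.0, 1.0]
print(two_linear_layers(x), matvec(W_single, x))   # [-0.5, 5.5] [-0.5, 5.5]

# ...but the ReLU version is not linear: for any linear map f,
# f(x) + f(-x) would be the zero vector, and here it is not.
neg_x = [-v for v in x]
print([a + b for a, b in zip(two_layers_with_relu(x), two_layers_with_relu(neg_x))])  # [3.5, 3.5]
```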

Unveiling the Inner Workings:

Deep learning programs learn in a way that resembles how a young child learns to recognize objects: by seeing many examples and gradually refining a concept. Each layer of the algorithm applies a nonlinear transformation to its input and uses what it learns to build a statistical model as output. Training repeats this process until the model's output reaches an acceptable level of accuracy. The term “deep” refers to the number of layers the data passes through, each one producing a more refined representation than the last.
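
To make the idea of layers concrete, here is a minimal Python sketch of a forward pass through a small three-layer network. The weights are hand-picked and hypothetical (a real network learns them during training), and the sigmoid is just one possible choice of nonlinearity. The function keeps every intermediate representation so you can see the data being transformed layer by layer.

```python
import math

def dense(inputs, weights, biases):
    """One fully connected layer: a weighted sum plus a bias for each output unit."""
    return [sum(w * x for w, x in zip(row, inputs)) + b for row, b in zip(weights, biases)]

def sigmoid(v):
    """Nonlinear transformation applied element-wise after each layer."""
    return [1.0 / (1.0 + math.exp(-z)) for z in v]

# Hypothetical network with hand-picked (untrained) weights: 3 -> 2 -> 2 -> 1.
LAYERS = [
    ([[0.5, -0.3, 0.8], [-0.6, 0.9, 0.1]], [0.0, 0.1]),
    ([[1.2, -0.7], [0.4, 0.4]], [-0.2, 0.0]),
    ([[0.9, -1.1]], [0.05]),
]

def forward(x):
    """Pass x through every layer, keeping each intermediate representation."""
    representations = [x]
    for weights, biases in LAYERS:
        x = sigmoid(dense(x, weights, biases))
        representations.append(x)
    return representations

for depth, rep in enumerate(forward([1.0, 0.0, 2.0])):
    print(depth, [round(v, 3) for v in rep])
```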

The Advantages of Deep Learning:

In traditional machine learning, programmers must carefully specify which features the model should look at, a process known as feature engineering. A deep learning program instead learns its own features directly from the data. This is typically faster and often more accurate, because the program is not limited to the features a person thought to define. Training begins by presenting the program with training data, such as labeled examples. The program learns a set of features from this data and builds a predictive model. With each iteration, the model becomes more refined, adapting to patterns and improving its predictions.
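
As an illustration of learning features from labeled examples, the minimal Python sketch below trains a tiny network on XOR, a classic task that no single linear boundary can solve. Nobody tells the hidden layer what features to compute; gradient descent (via backpropagation) shapes them. The network size, learning rate, seed, and stopping rule are all assumptions chosen for the example, and the loop simply stops once a target accuracy is reached or an epoch budget runs out.

```python
import math
import random

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

# Labeled training examples for XOR: (inputs, target).
DATA = [([0.0, 0.0], 0.0), ([0.0, 1.0], 1.0), ([1.0, 0.0], 1.0), ([1.0, 1.0], 0.0)]

def train(hidden=4, lr=0.5, target_accuracy=1.0, max_epochs=10000, seed=0):
    """Full-batch gradient descent on mean squared error (hypothetical settings)."""
    rng = random.Random(seed)
    w1 = [[rng.uniform(-1, 1) for _ in range(2)] for _ in range(hidden)]
    b1 = [0.0] * hidden
    w2 = [rng.uniform(-1, 1) for _ in range(hidden)]
    b2 = 0.0
    losses, accuracy, n = [], 0.0, len(DATA)
    for _ in range(max_epochs):
        g_w1 = [[0.0, 0.0] for _ in range(hidden)]
        g_b1, g_w2, g_b2 = [0.0] * hidden, [0.0] * hidden, 0.0
        loss, correct = 0.0, 0
        for x, y in DATA:
            # Forward pass: hidden features, then the output prediction.
            h = [sigmoid(sum(w * xi for w, xi in zip(w1[j], x)) + b1[j]) for j in range(hidden)]
            out = sigmoid(sum(w * hj for w, hj in zip(w2, h)) + b2)
            loss += (out - y) ** 2
            correct += int((out >= 0.5) == (y == 1.0))
            # Backpropagation: chain rule through the output and hidden sigmoids.
            d_out = 2.0 * (out - y) * out * (1.0 - out)
            g_b2 += d_out
            for j in range(hidden):
                g_w2[j] += d_out * h[j]
                d_h = d_out * w2[j] * h[j] * (1.0 - h[j])
                g_b1[j] += d_h
                for i in range(2):
                    g_w1[j][i] += d_h * x[i]
        losses.append(loss / n)
        accuracy = correct / n
        if accuracy >= target_accuracy:
            break  # desired accuracy reached, so stop iterating
        # Update every weight against its averaged gradient.
        for j in range(hidden):
            w2[j] -= lr * g_w2[j] / n
            b1[j] -= lr * g_b1[j] / n
            for i in range(2):
                w1[j][i] -= lr * g_w1[j][i] / n
        b2 -= lr * g_b2 / n
    return losses, accuracy

losses, accuracy = train()
print(len(losses), round(losses[0], 4), round(losses[-1], 4), accuracy)
```

`train()` returns the loss recorded at each epoch and the final training accuracy. Whether a particular random initialization reaches 100% accuracy within the epoch budget is not guaranteed; a different `seed` or more hidden units may be needed.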

Empowering Progress with Data and Processing Power:

Deep learning programs demand large amounts of training data and processing power, both of which have become more accessible with the rise of big data and cloud computing. Because deep learning builds complex statistical models directly from its own iterative output, it is well suited to analyzing large quantities of unstructured and even unlabeled data, a capability that grows more important as the Internet of Things (IoT) expands.
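
A quick back-of-the-envelope calculation shows why processing power matters. The Python sketch below counts the parameters and the multiply-accumulate operations (MACs) needed for one forward pass through a fully connected network. The layer sizes and the 60,000-example dataset are hypothetical, and the count covers only the forward pass; training adds a backward pass and repeats everything over many epochs, so the real total is several times larger.

```python
def dense_network_cost(layer_sizes):
    """Return (parameters, multiply-accumulates per example) for a fully connected net."""
    params = 0
    macs = 0
    for n_in, n_out in zip(layer_sizes, layer_sizes[1:]):
        params += n_in * n_out + n_out   # weight matrix plus one bias per output unit
        macs += n_in * n_out             # one multiply-add per weight in a forward pass
    return params, macs

# Hypothetical sizes: 784 inputs (e.g. a 28x28 grayscale image), two hidden layers, 10 classes.
params, macs = dense_network_cost([784, 512, 512, 10])
examples = 60_000  # hypothetical training-set size
print(params, macs, macs * examples)   # 669706 668672 40120320000
```

Even this small network needs about 40 billion multiply-adds just to run the training set forward once, which is why GPUs and cloud hardware became so important.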

Methods and Techniques:

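Several techniques help make deep learning models effective. The learning rate is a hyperparameter that controls how much the model's weights change in response to the estimated error at each update; learning rate decay adjusts this rate during training, typically lowering it over time, to improve performance and shorten training. Transfer learning takes a model that has already been trained and fine-tunes it for a new task, which requires far less data and computation than starting over. Training from scratch means collecting a large labeled dataset and designing a network architecture that learns the features and the model from that data; it suits new applications and tasks with many output categories, but it demands much more data and training time. Dropout combats overfitting by randomly removing units, along with their connections, from the neural network during training, which has been shown to improve performance on a range of learning tasks.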
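
Two of these techniques are easy to sketch without a framework. The Python code below shows exponential decay, one common learning rate schedule among several, and inverted dropout, the variant that rescales surviving units during training so nothing needs to change at inference time. The function names and the settings in the usage lines are illustrative assumptions, not any particular library's API.

```python
import random

def exponential_decay(initial_lr, decay_rate, step, decay_steps):
    """One common schedule: lr = initial_lr * decay_rate ** (step / decay_steps)."""
    return initial_lr * decay_rate ** (step / decay_steps)

def dropout(activations, p, rng, training=True):
    """Inverted dropout: during training, zero each unit with probability p and
    scale the survivors by 1 / (1 - p) so the expected activation is unchanged.
    At inference time (training=False) the activations pass through untouched."""
    if not 0.0 <= p < 1.0:
        raise ValueError("p must be in [0, 1)")
    if not training:
        return list(activations)
    keep = 1.0 - p
    return [a / keep if rng.random() < keep else 0.0 for a in activations]

# Hypothetical settings: the learning rate halves every 1,000 steps.
for step in (0, 1000, 2000):
    print(step, exponential_decay(0.1, 0.5, step, 1000))

rng = random.Random(42)
print(dropout([1.0] * 8, p=0.5, rng=rng))   # each entry is either 0.0 or 2.0
```

Because a different random subset of units is dropped on each training pass, the network cannot rely too heavily on any single unit, which is the intuition behind dropout's regularizing effect.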

The Spectrum of Deep Learning Applications:

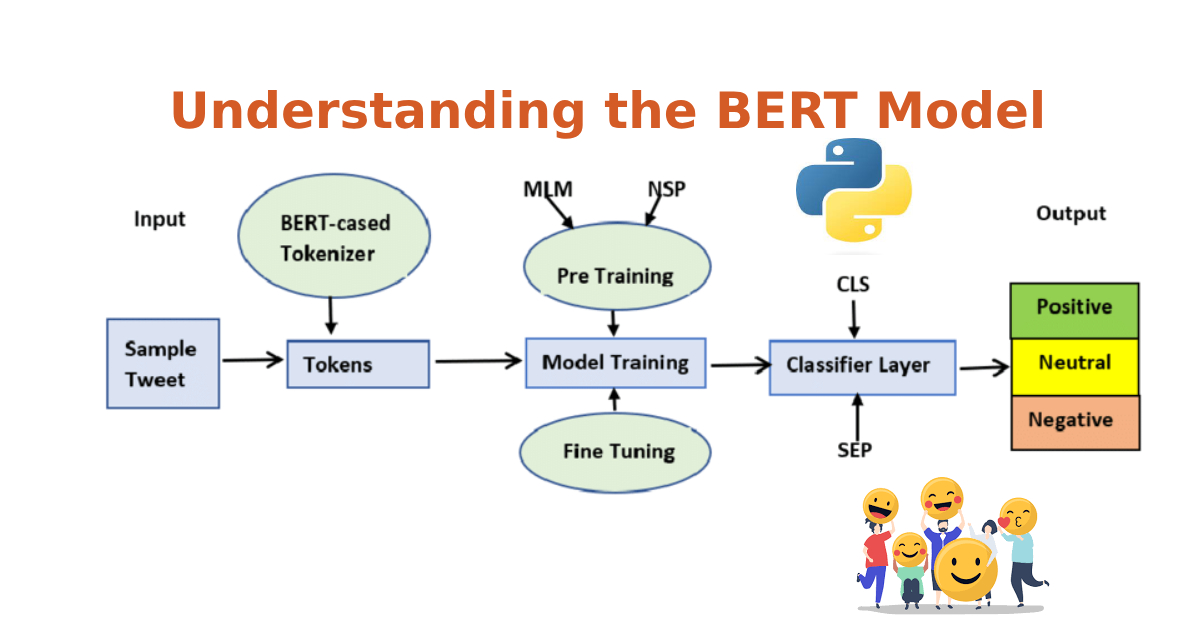

Deep learning models, loosely inspired by the way the human brain processes information, are used in many fields. Image recognition, natural language processing (NLP), speech recognition, and self-driving cars are just a few of the areas that benefit from their capabilities. Other applications include improving customer experience, text generation, aerospace and military operations, industrial automation, colorizing black-and-white images and video, medical research, and computer vision.

Limitations and Challenges:

Despite their power, deep learning models have limitations. They learn only from the data they observe, so what they know is limited to what that data contains, and they absorb any biases present in their training datasets. Models that perpetuate those biases can raise serious ethical problems. The learning rate also needs careful calibration: if it is too high, the model can converge too quickly on a suboptimal solution or fail to converge; if it is too low, training can stall. Hardware is another constraint, since training typically requires high-performance GPUs that are costly to buy and consume a lot of energy. Finally, deep learning needs large amounts of data, and a trained model is usually specialized for one task: it cannot easily multitask and is poorly suited to tasks that require reasoning.
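
The effect of the learning rate can be seen on a toy problem. This Python sketch runs plain gradient descent on f(x) = x², whose minimum is at x = 0, using three hypothetical learning rates. Each update multiplies x by (1 − 2·lr), so a small rate creeps toward the minimum, a moderate rate reaches it quickly, and a rate above 1.0 overshoots, flipping sign and growing at every step. Because this toy function is convex with a single minimum, an overly large rate shows up here as divergence rather than as settling on a poor solution.

```python
def gradient_descent(lr, steps=50, x0=1.0):
    """Minimize the toy loss f(x) = x**2, whose minimum is at x = 0."""
    x = x0
    for _ in range(steps):
        x -= lr * 2.0 * x   # the gradient of x**2 is 2x
    return x

# Hypothetical learning rates: too small, reasonable, too large.
for lr in (0.01, 0.4, 1.1):
    print(lr, gradient_descent(lr))
```

After 50 steps, the rate of 0.01 leaves x at roughly 0.36, still far from the minimum; 0.4 brings it to about 1e-35; and 1.1 drives it past 9,000.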

Deep Learning vs. Machine Learning:

Deep learning is a subset of machine learning that differs in how it approaches problems. Traditional machine learning relies on domain experts to identify the features a model should use, whereas deep learning learns those features on its own. Deep learning algorithms take much longer to train, although they can run faster at test time. Traditional machine learning is generally the better choice for smaller datasets or when interpretability is essential, while deep learning shines when data is abundant, the problem is complex, and there is little domain knowledge to guide feature design, as in speech recognition and NLP.

Conclusion:

Deep learning has become one of the most transformative approaches in artificial intelligence. Fueled by large amounts of data and growing processing capabilities, deep learning models are reshaping many industries and changing the way we solve complex problems. Challenges remain, but the field continues to advance quickly, pointing toward a future in which machines learn in ways that were not possible before.