Language models have revolutionized natural language processing (NLP) by providing state-of-the-art performance on a wide range of language-related tasks. Large Language Models (LLMs) like GPT-3 have gained significant attention due to their ability to understand and generate human-like text. In this article, we’ll explore how to leverage LLMs in Python, including their implementation and applications.

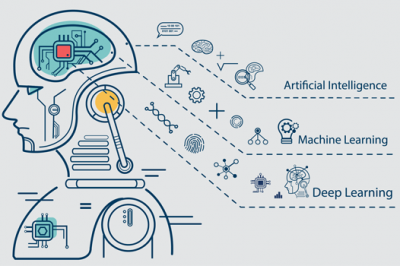

What Are LLMs?

Large Language Models (LLMs) are deep learning models, often based on transformer architectures, that have been pre-trained on vast amounts of text data. These models can generate coherent and contextually relevant text, answer questions, translate languages, and perform various other NLP tasks. The key characteristics of LLMs include:

- Pre-training: LLMs are trained on massive text corpora, much of it collected from the internet. During pre-training, they learn language patterns, grammar, context, and even some world knowledge.

- Fine-tuning: After pre-training, LLMs can be fine-tuned on specific tasks or datasets to adapt them for particular applications like chatbots, text generation, or sentiment analysis.
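For GPT-style models such as the GPT-2 used below, the pre-training objective is next-token prediction: the model is trained to minimize the average negative log-likelihood (cross-entropy) of each actual next token in the corpus. The sketch below illustrates that objective with a toy count-based bigram model standing in for the neural network. This is a simplification: a real LLM conditions on the entire preceding context rather than a single token, and encoder models like BERT use a masked-token objective instead.

```python
import math
from collections import Counter, defaultdict

def train_bigram(tokens):
    """Estimate P(next | current) by counting adjacent token pairs."""
    counts = defaultdict(Counter)
    for current, nxt in zip(tokens, tokens[1:]):
        counts[current][nxt] += 1
    return {
        current: {nxt: n / sum(c.values()) for nxt, n in c.items()}
        for current, c in counts.items()
    }

def next_token_loss(model, tokens):
    """Average negative log-likelihood of each token given the one before it.

    Assumes every adjacent pair in `tokens` was seen during training.
    """
    losses = [-math.log(model[current][nxt]) for current, nxt in zip(tokens, tokens[1:])]
    return sum(losses) / len(losses)

corpus = "the cat sat the cat ran".split()
model = train_bigram(corpus)
print(model["cat"])                    # {'sat': 0.5, 'ran': 0.5}
print(next_token_loss(model, corpus))  # 2 * ln(2) / 5, roughly 0.277
```

Training a real LLM amounts to adjusting network weights so that this same loss goes down across billions of tokens.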

Implementation of LLMs in Python

To use LLMs in Python, you'll typically combine a deep learning framework such as PyTorch or TensorFlow with a library built for working with pre-trained models, such as Hugging Face Transformers. Here's a step-by-step guide to getting started:

Step 1: Install Dependencies

First, install the necessary Python libraries:

```shell
pip install transformers torch
```

Step 2: Load a Pre-trained Model

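You can choose from many pre-trained models, such as GPT-2 or BERT, depending on your application. Note that GPT-3 is only accessible through OpenAI's API rather than as downloadable weights, and encoder-only models like BERT are suited to tasks such as classification rather than free-form text generation. Here, we'll use GPT-2 as an example: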

```python
from transformers import GPT2LMHeadModel, GPT2Tokenizer

# Load the pre-trained model and tokenizer
model_name = "gpt2"
model = GPT2LMHeadModel.from_pretrained(model_name)
tokenizer = GPT2Tokenizer.from_pretrained(model_name)
```

Step 3: Generate Text

Once you have the model and tokenizer, you can generate text by providing a prompt:

```python
# Define a text prompt
prompt = "Once upon a time, in a land far, far away"

# Tokenize the prompt (returns input_ids and an attention_mask as PyTorch tensors)
inputs = tokenizer(prompt, return_tensors="pt")

# Generate text; GPT-2 has no padding token, so reuse its end-of-sequence token
output = model.generate(
    **inputs,
    max_length=100,
    num_return_sequences=1,
    pad_token_id=tokenizer.eos_token_id,
)

# Decode the generated token IDs back into text
generated_text = tokenizer.decode(output[0], skip_special_tokens=True)

# Print the generated text
print(generated_text)
```

This code generates a continuation of the given prompt using the pre-trained GPT-2 model.
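Unless a sampling option is set, generate() decodes greedily, always picking the single most likely next token, so the output is deterministic and can become repetitive. Passing do_sample=True together with parameters such as temperature and top_k to model.generate() makes it sample instead. The following is a simplified pure-Python sketch of what temperature scaling plus top-k filtering does to the model's raw scores (logits); the logits here are made-up numbers, not real GPT-2 output.

```python
import math
import random

def sample_top_k(logits, k, temperature=1.0, rng=random):
    """Pick a token index from raw scores using temperature scaling and top-k filtering."""
    if k < 1 or temperature <= 0:
        raise ValueError("k must be >= 1 and temperature must be > 0")
    # Keep only the indices of the k highest-scoring tokens
    top = sorted(range(len(logits)), key=lambda i: logits[i], reverse=True)[:k]
    # Divide by the temperature: values below 1 sharpen the distribution, above 1 flatten it
    scaled = [logits[i] / temperature for i in top]
    # Softmax over the surviving tokens (subtracting the max keeps exp() numerically stable)
    m = max(scaled)
    weights = [math.exp(s - m) for s in scaled]
    # random.choices normalizes the weights, so they need not sum to 1
    return rng.choices(top, weights=weights, k=1)[0]

logits = [2.0, 1.0, 0.5, -1.0]  # hypothetical scores for a 4-token vocabulary
rng = random.Random(0)
print([sample_top_k(logits, k=2, temperature=0.7, rng=rng) for _ in range(10)])
```

With k=1 this reduces to greedy decoding; larger k and higher temperatures trade predictability for variety.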

Applications of LLMs

LLMs have a wide range of applications across various domains. Here are some notable examples:

1. Text Generation

LLMs can generate human-like text for content creation, creative writing, and storytelling. They can also be used to complete sentences or paragraphs based on a given input.

2. Chatbots and Virtual Assistants

LLMs serve as the backbone for chatbots and virtual assistants. They can understand and generate natural language responses, making them useful for customer support, virtual companions, and automated messaging systems.
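Because a model like GPT-2 only sees the text it is given, a chatbot typically re-sends the recent conversation as part of each prompt and drops the oldest turns once the model's input limit is reached. The sketch below assumes a hypothetical plain "User:/Assistant:" format and uses whitespace splitting as a rough stand-in for a real tokenizer; models trained specifically for chat define their own formats (Transformers tokenizers for such models expose apply_chat_template for this).

```python
def build_chat_prompt(history, user_message, max_tokens=200,
                      count_tokens=lambda s: len(s.split())):
    """Format recent conversation turns into one prompt that fits a token budget.

    history: list of (speaker, text) tuples, oldest first.
    count_tokens: rough token counter (whitespace splitting is a stand-in).
    If even the newest message exceeds the budget, only the "Assistant:" cue is returned.
    """
    turns = list(history) + [("User", user_message)]
    lines = [f"{speaker}: {text}" for speaker, text in turns]
    footer = "Assistant:"  # cue for the model to write the next reply
    budget = max_tokens - count_tokens(footer)
    kept = []
    # Walk backwards so the newest turns survive when the budget runs out
    for line in reversed(lines):
        cost = count_tokens(line)
        if cost > budget:
            break
        kept.append(line)
        budget -= cost
    kept.reverse()
    return "\n".join(kept + [footer])

history = [("User", "hi there"), ("Assistant", "hello how can I help")]
print(build_chat_prompt(history, "tell me a joke", max_tokens=100))
```

The model's reply would then be generated from this prompt and appended to history as an ("Assistant", ...) turn.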

3. Translation Services

LLMs can perform translation tasks by converting text from one language to another. Dedicated encoder-decoder translation models such as MarianMT, whose checkpoints mostly cover one language pair each, are also a common choice for this task.
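The MarianMT checkpoints published by Helsinki-NLP on the Hugging Face Hub follow the naming pattern Helsinki-NLP/opus-mt-{source}-{target} (for example, opus-mt-en-de for English to German), and the Transformers translation pipeline returns a list of dicts with a "translation_text" key. The sketch below relies on both; not every language pair exists on the Hub, and the Marian tokenizer additionally needs the sentencepiece package installed.

```python
def marian_model_id(source_lang, target_lang):
    """Build a Helsinki-NLP MarianMT checkpoint name, e.g. 'Helsinki-NLP/opus-mt-en-de'."""
    return f"Helsinki-NLP/opus-mt-{source_lang}-{target_lang}"

def translate(texts, source_lang="en", target_lang="de"):
    """Translate a list of strings with a MarianMT model (downloaded on first use)."""
    # Imported here so marian_model_id works even without Transformers installed
    from transformers import pipeline
    translator = pipeline("translation", model=marian_model_id(source_lang, target_lang))
    return [result["translation_text"] for result in translator(texts)]
```

For example, translate(["How are you today?"], "en", "de") returns a one-element list with the German translation.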

4. Sentiment Analysis

By fine-tuning LLMs on sentiment analysis datasets, you can build models that analyze the sentiment of text, helping businesses understand customer feedback and public sentiment.
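Fine-tuning your own model requires a labeled dataset and a training loop; a quicker way to start is a checkpoint someone has already fine-tuned. The sketch below loads distilbert-base-uncased-finetuned-sst-2-english (DistilBERT fine-tuned on SST-2, a movie-review sentiment dataset) through the Transformers sentiment pipeline, which returns one {"label": ..., "score": ...} dict per input. The summarize_sentiment helper and its min_score threshold are illustrative assumptions for turning those predictions into feedback statistics.

```python
from collections import Counter

def load_sentiment_classifier():
    """Return a sentiment pipeline backed by a model already fine-tuned on SST-2."""
    # Imported lazily so summarize_sentiment runs without Transformers installed
    from transformers import pipeline
    return pipeline("sentiment-analysis", model="distilbert-base-uncased-finetuned-sst-2-english")

def summarize_sentiment(results, min_score=0.0):
    """Count predicted labels, ignoring predictions whose confidence is below min_score.

    results: list of {"label": ..., "score": ...} dicts, as returned by the pipeline.
    Returns (label counts, share of each label among the counted predictions).
    """
    counts = Counter(r["label"] for r in results if r["score"] >= min_score)
    total = sum(counts.values())
    share = {label: n / total for label, n in counts.items()} if total else {}
    return counts, share
```

In practice you would call classifier = load_sentiment_classifier(), then summarize_sentiment(classifier(feedback_texts), min_score=0.9), where feedback_texts is your own list of strings.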

5. Content Summarization

LLMs can automatically summarize long documents or articles, making it easier for users to extract key information quickly.
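Summarization models have a fixed maximum input length, so long documents are often split into chunks that are summarized separately, after which the partial summaries are summarized again ("map-reduce" summarization). The sketch below uses words as a rough proxy for tokens, with an arbitrary 400-word chunk size, and accepts any summarize callable. With Transformers, the summarization pipeline returns a list of {"summary_text": ...} dicts, so a wrapper such as lambda t: summarizer(t)[0]["summary_text"] would fit.

```python
def chunk_words(text, max_words=400):
    """Split text into consecutive chunks of at most max_words words."""
    words = text.split()
    return [" ".join(words[i:i + max_words]) for i in range(0, len(words), max_words)]

def summarize_long(text, summarize, max_words=400):
    """Map-reduce summarization: summarize each chunk, then summarize the joined summaries.

    summarize: any callable str -> str. Only one reduce step is performed, so the
    joined partial summaries are assumed to fit within the model's input limit.
    """
    chunks = chunk_words(text, max_words)
    if len(chunks) <= 1:
        return summarize(text)
    partial = " ".join(summarize(chunk) for chunk in chunks)
    return summarize(partial)

# Stand-in summarizer for illustration: keep the first word of its input
first_word = lambda s: s.split()[0]
print(summarize_long("a b c d e f", first_word, max_words=2))  # a
```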

6. Question-Answering Systems

LLMs can answer questions based on a given context, making them suitable for applications like virtual FAQs, educational tools, and information retrieval systems.
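Many question-answering systems are extractive: a model fine-tuned for QA scores every context token as a possible answer start and a possible answer end, and the answer is the highest-scoring valid span. The Transformers question-answering pipeline performs this decoding internally and returns a dict with "answer", "score", "start", and "end" keys. The sketch below shows only the span-selection step on made-up scores; the real pipeline also applies softmax and excludes question and special tokens.

```python
def best_answer_span(start_logits, end_logits, max_answer_len=15):
    """Return (start, end) token indices maximizing start_logits[s] + end_logits[e].

    Only spans with s <= e < s + max_answer_len are considered.
    """
    best, best_score = None, float("-inf")
    for s, start_score in enumerate(start_logits):
        for e in range(s, min(s + max_answer_len, len(end_logits))):
            score = start_score + end_logits[e]
            if score > best_score:
                best, best_score = (s, e), score
    return best

# Question: "Where is the Eiffel Tower?" (logits are invented for illustration)
tokens = ["The", "Eiffel", "Tower", "is", "in", "Paris"]
start_logits = [0.1, 0.2, 0.0, 0.1, 0.3, 2.8]
end_logits = [0.0, 0.1, 0.3, 0.0, 0.1, 3.1]
s, e = best_answer_span(start_logits, end_logits)
print(" ".join(tokens[s:e + 1]))  # Paris
```

Requiring end >= start and capping the span length keeps the model from returning backwards or implausibly long answers.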

7. Loading Data from a CSV File into an LLM

Loading data from a CSV file into a Large Language Model (LLM) typically involves tokenizing the text data from the CSV and then feeding it into the LLM for processing. In this example, we'll use Python along with the Hugging Face Transformers library to load data from a CSV file and pass it through a pre-trained LLM for text generation.

Suppose you have a CSV file named input_data.csv with a column called “text” that contains the text data you want to pass to the LLM.

```python
import pandas as pd
from transformers import GPT2LMHeadModel, GPT2Tokenizer

# Load the CSV data into a pandas DataFrame and skip rows with an empty "text" cell
data = pd.read_csv("input_data.csv")
data = data.dropna(subset=["text"])

# Initialize the pre-trained model and tokenizer
model_name = "gpt2"
model = GPT2LMHeadModel.from_pretrained(model_name)
tokenizer = GPT2Tokenizer.from_pretrained(model_name)

# Loop through the texts in the "text" column
for text in data["text"].astype(str):
    # Tokenize the text, truncating long inputs to 512 tokens
    inputs = tokenizer(text, return_tensors="pt", max_length=512, truncation=True)

    # Generate up to 100 new tokens; unlike max_length, max_new_tokens does not
    # count the prompt, so long inputs still leave room for generation
    generated_output = model.generate(
        **inputs,
        max_new_tokens=100,
        num_return_sequences=1,
        pad_token_id=tokenizer.eos_token_id,
    )

    # Decode only the newly generated tokens (the output also contains the prompt)
    prompt_length = inputs["input_ids"].shape[1]
    generated_text = tokenizer.decode(generated_output[0][prompt_length:], skip_special_tokens=True)

    # Print the original and generated text
    print(f"Original Text: {text}")
    print(f"Generated Text: {generated_text}")
    print("=" * 50)
```

8. Loading Data from Elasticsearch into an LLM

Reading data from Elasticsearch to use with a Large Language Model (LLM) in Python involves querying an Elasticsearch index, retrieving the text data, and then passing it to the LLM for processing. You'll need the official Python client (the elasticsearch package, also known as elasticsearch-py) to interact with Elasticsearch and the transformers library to run the LLM. You can install both, along with PyTorch, with pip install elasticsearch transformers torch. The example below is written for version 8.x of the client.

Here’s a step-by-step example:

Suppose you have an Elasticsearch index named “documents” with a field called “content” that contains the text data you want to use.

```python
from elasticsearch import Elasticsearch
from transformers import GPT2LMHeadModel, GPT2Tokenizer

# Initialize the Elasticsearch client (adjust the URL and authentication to your cluster)
es = Elasticsearch("http://localhost:9200")

# Initialize the pre-trained model and tokenizer
model_name = "gpt2"
model = GPT2LMHeadModel.from_pretrained(model_name)
tokenizer = GPT2Tokenizer.from_pretrained(model_name)

# Perform the Elasticsearch search: match_all returns every document, and
# size sets how many hits to retrieve (modify both as needed)
results = es.search(index="documents", query={"match_all": {}}, size=10)

# Process and generate text for each document
for hit in results["hits"]["hits"]:
    # Extract the text from the Elasticsearch document
    text = hit["_source"]["content"]

    # Tokenize the text, truncating long documents to 512 tokens
    inputs = tokenizer(text, return_tensors="pt", max_length=512, truncation=True)

    # Generate up to 100 new tokens (max_new_tokens excludes the prompt length)
    generated_output = model.generate(
        **inputs,
        max_new_tokens=100,
        num_return_sequences=1,
        pad_token_id=tokenizer.eos_token_id,
    )

    # Decode only the newly generated tokens (the output also contains the prompt)
    prompt_length = inputs["input_ids"].shape[1]
    generated_text = tokenizer.decode(generated_output[0][prompt_length:], skip_special_tokens=True)

    # Print the original and generated text
    print(f"Original Text: {text}")
    print(f"Generated Text: {generated_text}")
    print("=" * 50)
```