Introduction

Large Language Models (LLMs) have become a cornerstone of modern Natural Language Processing (NLP). From early statistical models to today’s deep learning architectures, the field has changed substantially in a short time. In this article, we trace the history of LLMs, survey current work, and outline promising research directions.

History of LLMs

Early Beginnings: Statistical Models

Before neural networks took hold in NLP, statistical methods dominated. These models relied heavily on techniques like Bayesian inference and Hidden Markov Models (HMMs). They estimated probabilities from large amounts of text and used them to predict which words or phrases were likely to follow a given sequence.
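
The idea of predicting the next word from counts can be illustrated with a bigram model, one of the simplest count-based predictors. This is only a sketch: the choice of a bigram model, the function names, and the toy corpus are assumptions made for illustration, not a description of any specific historical system.

```python
from collections import Counter, defaultdict

def train_bigram(tokens):
    """Count how often each word follows each other word."""
    counts = defaultdict(Counter)
    for prev, nxt in zip(tokens, tokens[1:]):
        counts[prev][nxt] += 1
    return counts

def next_word_probs(counts, prev):
    """Turn the raw follow-up counts for `prev` into probabilities."""
    total = sum(counts[prev].values())
    return {word: c / total for word, c in counts[prev].items()}

# Toy corpus (hypothetical); real models were estimated from far larger text collections.
corpus = "the cat sat on the mat and the cat slept".split()
model = train_bigram(corpus)
probs = next_word_probs(model, "the")
print(probs)
```

In this toy corpus "the" is followed by "cat" twice and "mat" once, so the model assigns "cat" a probability of 2/3 and "mat" 1/3. A word that never appeared in the corpus gets an empty distribution, which hints at the sparsity problem such models face.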

Neural Networks: The Shift Begins

The rise of Recurrent Neural Networks (RNNs) and Long Short-Term Memory (LSTM) networks marked the beginning of neural NLP. These models carry a hidden state from one token to the next, which lets them capture sequential dependencies in text and address many limitations of statistical methods.
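
A minimal sketch of how a recurrent network processes a sequence is shown below. It implements a plain (Elman-style) recurrent update rather than an LSTM, and the weights and inputs are hypothetical values chosen only to make the example run.

```python
import math

def rnn_step(x, h_prev, W_xh, W_hh, b):
    """One recurrent update: h = tanh(W_xh @ x + W_hh @ h_prev + b)."""
    h = []
    for i in range(len(b)):
        total = b[i]
        total += sum(W_xh[i][j] * x[j] for j in range(len(x)))
        total += sum(W_hh[i][k] * h_prev[k] for k in range(len(h_prev)))
        h.append(math.tanh(total))
    return h

def run_rnn(inputs, W_xh, W_hh, b):
    """Feed the inputs one at a time, carrying the hidden state forward."""
    h = [0.0] * len(b)
    for x in inputs:
        h = rnn_step(x, h, W_xh, W_hh, b)
    return h

# Hypothetical toy weights: 2 input features, 2 hidden units.
W_xh = [[0.5, -0.3], [0.1, 0.8]]
W_hh = [[0.2, 0.0], [0.0, 0.2]]
b = [0.0, 0.0]

a, c = [1.0, 0.0], [0.0, 1.0]
h_ac = run_rnn([a, c], W_xh, W_hh, b)
h_ca = run_rnn([c, a], W_xh, W_hh, b)
print(h_ac, h_ca)
```

The two final states differ even though both sequences contain the same inputs, because each step depends on the state left by the previous one. That same dependence is why the loop cannot be parallelized across time steps.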

The Transformers Revolution

Introduced in the 2017 paper “Attention Is All You Need” by Vaswani et al., the transformer architecture changed the landscape. Its self-attention mechanism lets every token attend directly to every other token, so the model can capture long-range dependencies without processing the sequence step by step, which in turn allows computation to be parallelized. This innovation laid the foundation for the large-scale models we see today.
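
The core of self-attention can be sketched in a few lines. The version below is deliberately simplified: it uses the input vectors directly as queries, keys, and values, whereas a real transformer applies learned projections and multiple attention heads. The input matrix is a hypothetical toy example.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(X):
    """Scaled dot-product self-attention with Q = K = V = X (no learned projections)."""
    d = len(X[0])
    outputs, weights = [], []
    for q in X:
        # Similarity of this token to every token, scaled by sqrt(d).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in X]
        w = softmax(scores)
        weights.append(w)
        # Output is the attention-weighted average of all token vectors.
        outputs.append([sum(w[j] * X[j][c] for j in range(len(X))) for c in range(d)])
    return outputs, weights

# Three toy token vectors; the first and third are identical.
X = [[1.0, 0.0], [0.0, 1.0], [1.0, 0.0]]
out, attn = self_attention(X)
for row in attn:
    print([round(w, 3) for w in row])
```

Each row of weights is computed independently of the others, which is what makes the operation easy to parallelize. The first token attends as strongly to the third token as to itself, regardless of the distance between them.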

Current Work: Dominance of Transformer-based LLMs

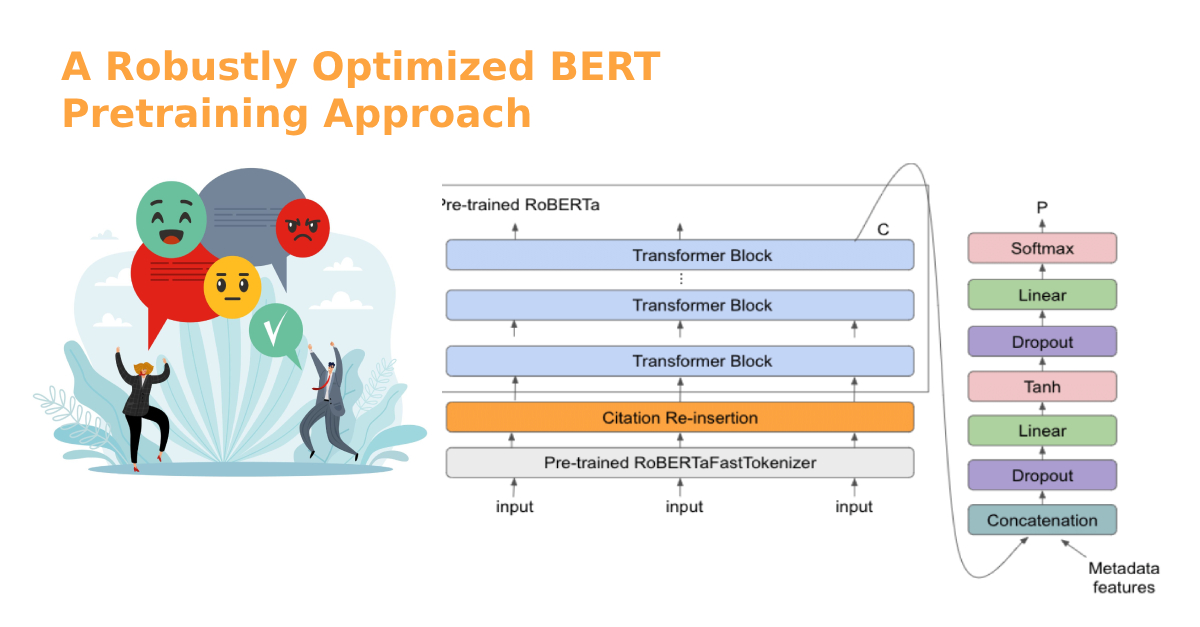

BERT and Its Progeny

BERT (Bidirectional Encoder Representations from Transformers), introduced by Google in 2018, marked a paradigm shift. Instead of predicting the next word in a sequence, BERT was trained to predict words that had been masked out of a sentence, using the context on both sides of each gap, which makes it bidirectional. Its pre-training and fine-tuning approach became a blueprint for subsequent models. Variants such as RoBERTa and DistilBERT soon followed, each improving or adapting BERT’s original methodology.
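
The masked-word objective can be sketched as a data-preparation step. The function below is a simplification under stated assumptions: it always substitutes a "[MASK]" placeholder for each selected token, while BERT's published recipe also sometimes keeps the original token or swaps in a random one. The function name and the sample sentence are hypothetical.

```python
import random

MASK = "[MASK]"

def mask_tokens(tokens, mask_prob=0.15, seed=0):
    """Hide a random subset of tokens; return model inputs and prediction targets."""
    rng = random.Random(seed)
    inputs, labels = [], []
    for tok in tokens:
        if rng.random() < mask_prob:
            inputs.append(MASK)   # the model sees a placeholder
            labels.append(tok)    # and must recover the original token
        else:
            inputs.append(tok)
            labels.append(None)   # no prediction needed at this position
    return inputs, labels

sentence = "the model reads context on both sides of each gap".split()
inputs, labels = mask_tokens(sentence, mask_prob=0.3, seed=1)
print(inputs)
print(labels)
```

Because the targets sit in the middle of the sentence rather than at its end, the model can use words on both the left and the right of each placeholder when making its prediction.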

GPT and the Promise of Generative Models

OpenAI’s GPT (Generative Pre-trained Transformer) series, especially GPT-3, gained significant attention for its generative capabilities. Unlike BERT, an encoder-only model used mainly for language understanding tasks such as classification, GPT is a decoder-only model that generates text one token at a time and can produce coherent, contextually relevant text over extended passages. With 175 billion parameters, GPT-3 showcased the power and potential of LLMs.

Multilingual and Domain-Specific Models

Recognizing the need for non-English language processing and domain-specific knowledge, models like mBERT (Multilingual BERT) and BioBERT (specialized for biomedical texts) emerged. These models are pushing the boundaries of NLP’s applicability across languages and specialized fields.

Research Areas: The Future of LLMs

Model Efficiency and Distillation

As models grow, their computational requirements can become prohibitive. Research into making them more efficient is gaining traction, for example through knowledge distillation, in which a small student model is trained to reproduce the outputs of a large teacher model.
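
One common way to set up distillation is to have the student match the teacher's temperature-softened output distribution. The sketch below computes that loss for a single example. The logits are hypothetical, and real training would combine this term with a standard loss on the true labels and minimize it with gradient descent.

```python
import math

def softmax(logits, temperature=1.0):
    """Softmax with temperature; higher temperature gives a softer distribution."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def distillation_loss(teacher_logits, student_logits, temperature=2.0):
    """KL(teacher || student) on softened distributions, scaled by temperature**2."""
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    # Skip zero-probability teacher entries; assumes the student's q stays > 0.
    kl = sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)
    return kl * temperature ** 2

# Hypothetical logits over three classes.
teacher = [4.0, 1.0, 0.5]
student_near = [3.5, 1.2, 0.6]
student_far = [0.5, 1.0, 4.0]

loss_near = distillation_loss(teacher, student_near)
loss_far = distillation_loss(teacher, student_far)
print(loss_near, loss_far)
```

A student whose outputs resemble the teacher's incurs a small loss, and one that disagrees incurs a large loss. The softened targets carry more information than a single correct label because they also show how the teacher ranks the wrong answers.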

Ethical Considerations and Bias Mitigation

LLMs have come under scrutiny for perpetuating biases present in their training data. Addressing these biases and ensuring fairness, transparency, and accountability are significant research priorities.

Improving Generalization and Few-shot Learning

GPT-3 demonstrated few-shot learning, in which the model performs a task after seeing only a few examples in its prompt. However, considerable room remains for making models generalize better across diverse tasks with limited data.
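
In practice, few-shot learning of this kind amounts to placing worked examples in the prompt ahead of the new input. The sketch below builds such a prompt as a plain string. The "Input:" and "Output:" labels, the function name, and the translation examples are assumptions chosen for illustration, and no model is called.

```python
def build_few_shot_prompt(instruction, examples, query):
    """Assemble an instruction, a few solved examples, and one unsolved query."""
    lines = [instruction, ""]
    for source, target in examples:
        lines.append(f"Input: {source}")
        lines.append(f"Output: {target}")
        lines.append("")
    # The final item is left open for the model to complete.
    lines.append(f"Input: {query}")
    lines.append("Output:")
    return "\n".join(lines)

examples = [("cheese", "fromage"), ("sea otter", "loutre de mer")]
prompt = build_few_shot_prompt("Translate English to French.", examples, "peppermint")
print(prompt)
```

The model's weights are not updated at any point. The examples only condition the text that the model generates after the final "Output:" label.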

Combining Symbolic and Sub-symbolic Reasoning

While LLMs excel at pattern recognition, they sometimes falter at tasks requiring logical reasoning. Integrating symbolic reasoning (rule-based logic) with the sub-symbolic reasoning (neural networks) of LLMs is an emerging area of interest.

Exploring Non-textual Modalities

Most LLMs focus on text, but there’s growing interest in models that can handle multiple modalities, such as images and sound. OpenAI’s CLIP and DALL·E, along with ViLBERT, are prominent examples of this multimodal work.

Lifelong and Continual Learning

Neural networks, LLMs included, are prone to “catastrophic forgetting”: training on new tasks can overwrite what was learned on earlier ones. Research into models that can learn continually, without forgetting previous knowledge, is a promising frontier.

GPT: The Progenitor

GPT, the first in the series, was introduced in June 2018. While the transformer architecture itself was not new, the training methodology was innovative.

- Model Size: GPT had 117M parameters.

- Training Approach: It employed a two-step process: unsupervised pre-training followed by supervised fine-tuning. The model was first trained on a large corpus (BooksCorpus) to predict the next word in a sequence. After pre-training, it was fine-tuned on specific tasks using labeled data.

- Achievements: GPT achieved state-of-the-art performance on multiple NLP benchmarks, spanning natural language inference, question answering, semantic similarity, and text classification, showcasing the flexibility of the transformer architecture.

GPT-2: Scaling Up

Introduced in February 2019, GPT-2 took the foundational ideas of GPT and super-sized them.

- Model Size: GPT-2 came in various sizes, with the largest boasting a staggering 1.5B parameters.

- Training Data: WebText, a much more extensive dataset than BooksCorpus, was used. It contained data scraped from the web, providing a richer linguistic environment for the model.

- Achievements: GPT-2’s generative capabilities were striking. It could craft coherent, contextually relevant paragraphs and was even used to write essays and poetry. However, its ability to generate misleading information or fake news raised concerns about misuse.

- Release Controversy: Due to concerns about potential misuse, OpenAI staggered the release of GPT-2, starting with smaller versions and eventually releasing the full 1.5B parameter model after extensive safety evaluations.

GPT-3: The Colossus

GPT-3, described in a paper in May 2020 and made available through an API in June 2020, was not just an incremental improvement; it was a major jump in scale.

- Model Size: With 175 billion parameters, more than 100 times the size of GPT-2, it was the largest dense language model at its time of release.

- Capabilities: GPT-3 showcased impressive few-shot learning. Given a handful of examples, it could perform a new task, eliminating the need for extensive fine-tuning in many cases.

- Applications: The model’s versatility led to a wide range of applications, including drafting emails, writing code, answering questions, creating artistic content, and tutoring in various subjects.

- API & Commercial Impact: OpenAI released a commercial API for GPT-3, marking its transition from a research entity to a commercial player. The API empowered developers worldwide to integrate GPT-3 into applications, products, and services.
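
The parameter counts quoted for the GPT series can be sanity-checked with a common rule of thumb: a transformer has roughly 12 × layers × width² parameters outside its embedding tables. The sketch below applies that approximation. The layer counts and model widths are the figures reported for the largest model of each generation and do not appear in this article, so treat them as assumptions.

```python
def approx_transformer_params(n_layers, d_model):
    """Rough non-embedding parameter count: 12 * n_layers * d_model**2."""
    attention = 4 * d_model ** 2      # query, key, value, and output projections
    feed_forward = 8 * d_model ** 2   # two linear maps with a 4x hidden width
    return n_layers * (attention + feed_forward)

# (layers, model width) per generation; assumed figures, not taken from this article.
configs = {"GPT": (12, 768), "GPT-2": (48, 1600), "GPT-3": (96, 12288)}
estimates = {name: approx_transformer_params(*cfg) for name, cfg in configs.items()}

for name, n in estimates.items():
    print(f"{name}: ~{n / 1e9:.2f}B non-embedding parameters")
```

Under these assumptions the estimates come to about 85 million, 1.47 billion, and 174 billion parameters. The latter two are close to the 1.5B and 175 billion figures quoted above. The first falls short of 117M largely because the approximation leaves out the embedding tables, which matter more in a small model.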

The Road Ahead: Beyond GPT-3

While GPT-3 remains the latest major release in the GPT series at the time of this writing, the rapid evolution of the field suggests that even larger and more capable models might be on the horizon. As LLMs continue to grow, researchers and practitioners are increasingly focusing on challenges related to their efficiency, ethical implications, and potential biases.

Conclusion

From early statistical models to transformer architectures, the evolution of Large Language Models reflects the rapid pace of innovation in NLP. The GPT series illustrates that trajectory especially clearly, moving from a 117M-parameter model that was fine-tuned for each task to the few-shot capabilities of GPT-3. Both the broader LLM landscape and the GPT lineage point in the same direction: models that are more versatile, efficient, and ethical, and that come ever closer to a human-like understanding of language. Together, these advances set the stage for the next phase of AI-driven language research and bring the long-standing goal of machines that understand human language closer.