Introduction

In the rapidly evolving world of data engineering, serverless architectures have emerged as a game-changing technology. These architectures, where the management of servers is outsourced to cloud providers, allow data engineers to focus on building scalable and efficient data processing pipelines without the hassle of server management. This article delves into the role of serverless architectures in data engineering, highlighting their benefits and illustrating their application with coding examples.

What is Serverless Architecture?

Serverless architecture refers to a cloud computing model where the cloud provider dynamically manages the allocation of machine resources. Unlike traditional architectures where servers must be provisioned and managed, serverless architectures abstract these details away, allowing developers to focus purely on the code.

Benefits in Data Engineering

- Scalability: Automatically scales with the workload, perfect for handling variable data loads.

- Cost-Effectiveness: Pay only for the compute time you use, reducing costs significantly.

- Reduced Overhead: Eliminates the need for managing servers and infrastructure.

- Faster Time-to-Market: Simplifies deployment process, enabling quicker delivery of data solutions.

Serverless in Action: AWS Lambda Example

AWS Lambda is a popular serverless computing service. Let’s explore how it can be used for a simple data processing task.

Example: A Lambda function to process data from an S3 bucket and store the transformed data back into S3.

Requirements:

- AWS account

- Basic understanding of AWS services (Lambda, S3)

- Knowledge of Python

Step 1: Setting up the Trigger

First, we set up an S3 bucket that triggers a Lambda function upon the arrival of new data.

import boto3

import os

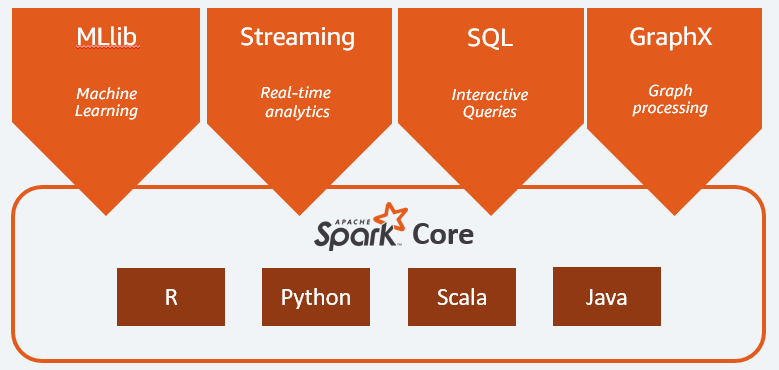

from pyspark.sql import SparkSession

from urllib.parse import unquote_plus

# Initialize Spark session

spark = SparkSession.builder.appName("pyspark-lambda").getOrCreate()

def lambda_handler(event, context):

for record in event['Records']:

bucket = record['s3']['bucket']['name']

key = unquote_plus(record['s3']['object']['key'])

input_path = f"s3://{bucket}/{key}"

output_path = f"s3://{bucket}/output/"

# Read the CSV file from S3

df = spark.read.csv(input_path, header=True, inferSchema=True)

# Perform transformations

transformed_df = transform_data(df)

# Write the transformed data back to S3

transformed_df.write.csv(output_path, mode="overwrite", header=True)

def transform_data(df):

# Example transformation: Rename a column

df = df.withColumnRenamed("old_column_name", "new_column_name")

# More transformations can be added here

# ...

return df

Step 2: Deploying the Lambda Function

This code can be deployed as a Lambda function through the AWS Management Console. Once deployed, the function will automatically be triggered every time a new file is uploaded to the specified S3 bucket.

Challenges and Best Practices

- State Management: Serverless functions are stateless. Use external storage like Amazon DynamoDB for maintaining state.

- Timeouts and Limits: Be mindful of the execution time limits and plan your data processing tasks accordingly.

- Error Handling: Implement robust error handling to manage failed executions or data processing errors.

Conclusion

Serverless architectures offer a flexible, efficient, and cost-effective solution for data engineering tasks. By leveraging services like AWS Lambda, data engineers can build scalable data processing pipelines that respond dynamically to changing workloads. While there are challenges to consider, the advantages of serverless computing in the context of data engineering are substantial, making it an essential tool in the modern data engineer’s toolkit.