In this guide, you’ll explore the differences between ThreadPoolExecutor and ProcessPoolExecutor and learn when to use each in your Python projects. Let’s begin.

What exactly does ThreadPoolExecutor entail?

ThreadPoolExecutor is a Python class that offers a thread pool mechanism.

A thread represents a unit of execution.

Threads belong to a process and can share memory, both state and data, with the other threads in that process. In Python, as in many modern programming languages, threads are created and managed by the underlying operating system; these are commonly called system threads or native threads.
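To make the shared-memory point concrete, here is a minimal sketch that uses the standard threading module directly. The names shared_log, log_lock, and record are made up for illustration. Three threads append to one list, and the main thread sees all three entries afterwards.

```python
import threading

shared_log = []                # one list object, visible to every thread
log_lock = threading.Lock()    # serializes access to the shared list

def record(name):
    # Each thread appends to the same list object owned by the process.
    with log_lock:
        shared_log.append(name)

threads = [threading.Thread(target=record, args=(f"worker-{i}",)) for i in range(3)]
for t in threads:
    t.start()
for t in threads:
    t.join()                   # wait for every thread to finish

print(sorted(shared_log))      # ['worker-0', 'worker-1', 'worker-2']
```

The lock is not strictly required for a single append in CPython, but guarding shared state is a good habit once several threads write to it.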

To create a thread pool, instantiate ThreadPoolExecutor and set the number of worker threads with the max_workers parameter. For instance:

from concurrent.futures import ThreadPoolExecutor

executor = ThreadPoolExecutor(max_workers=10)

You can send tasks to the thread pool for execution with the map() and submit() methods.

The map() method works much like Python’s built-in map() function. It accepts a function and an iterable of items, and applies the function to each item as a separate task in the thread pool. If the function returns values, you get an iterator over those results, in the same order as the input items.

Note that the call to map() itself doesn’t block; it submits the tasks and returns immediately. Retrieving each result from the returned iterator, however, blocks until the corresponding task has finished.
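The blocking behavior described above can be observed with a small, self-contained sketch. The task slow_square and the timing variables are hypothetical, and the sleep merely stands in for I/O.

```python
import time
from concurrent.futures import ThreadPoolExecutor

def slow_square(n):
    # Hypothetical task: sleep briefly to imitate I/O, then return a value.
    time.sleep(0.1)
    return n * n

# The with-block shuts the pool down when it exits.
with ThreadPoolExecutor(max_workers=4) as pool:
    start = time.perf_counter()
    results_iter = pool.map(slow_square, [1, 2, 3, 4])  # returns right away
    submit_time = time.perf_counter() - start

    results = list(results_iter)                        # blocks until all four finish
    total_time = time.perf_counter() - start

print(results)  # [1, 4, 9, 16]
print(f"map() returned after {submit_time:.4f}s, results ready after {total_time:.4f}s")
```

On a typical run, submit_time is a small fraction of total_time, because the waiting happens while the iterator is consumed rather than in the map() call.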

The general pattern looks like this:

# call a function on each item in a list and process results
for result in executor.map(task, items):
    print(result)  # process result...

You can also send tasks to the pool one at a time using the submit() method. It takes the target function and any arguments, and returns a Future object.

This Future object allows you to check the task’s status (e.g., done(), running(), or cancelled()) and retrieve its result or raised exception once it’s completed. Just be aware that result() and exception() calls will block until the associated task is finished.
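As a minimal sketch of these Future methods, the snippet below submits one task that succeeds and one that raises. The function divide is a hypothetical task chosen so that the second call fails with ZeroDivisionError.

```python
from concurrent.futures import ThreadPoolExecutor

def divide(a, b):
    # Hypothetical task; raises ZeroDivisionError when b is 0.
    return a / b

with ThreadPoolExecutor(max_workers=2) as pool:
    ok = pool.submit(divide, 10, 2)
    bad = pool.submit(divide, 1, 0)

    value = ok.result()       # blocks until the task finishes, then returns 5.0
    error = bad.exception()   # blocks, then returns the exception instance

print(value, ok.done(), type(error).__name__)  # 5.0 True ZeroDivisionError
```

Calling bad.result() instead of bad.exception() would re-raise the ZeroDivisionError in the calling thread.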

# submit a task to the pool and get a future immediately
future = executor.submit(task, item)
# get the result once the task is done
result = future.result()

When you’re done with the thread pool, you can shut it down using the shutdown() method to release the worker threads and their resources.

Example:

# shutdown the thread pool
executor.shutdown()

You can simplify creating and shutting down the thread pool by using a context manager, which calls shutdown() automatically.

Example:

# create a thread pool
with ThreadPoolExecutor(max_workers=10) as executor:
    # call a function on each item in a list and process results
    for result in executor.map(task, items):
        print(result)  # process result...
    # ...
# shutdown is called automatically

ProcessPoolExecutor

ProcessPoolExecutor is a Python class that provides a pool of worker processes.

A process is an instance of a running program. It has a main thread, may have additional threads, and can spawn child processes. Python, like many modern languages, relies on the operating system to create and manage processes.
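One way to see that pool workers really are separate processes is to have each task report its process id. This is a small sketch; describe is a hypothetical task name. The if __name__ == "__main__" guard is there because worker processes may re-import the main module when they start (this happens with the spawn start method), and the guard keeps them from creating pools of their own.

```python
import os
from concurrent.futures import ProcessPoolExecutor

def describe(item):
    # Runs inside a worker process; os.getpid() reports that worker's id.
    return item, os.getpid()

if __name__ == "__main__":
    with ProcessPoolExecutor(max_workers=2) as pool:
        for item, pid in pool.map(describe, range(4)):
            print(f"item {item} handled by process {pid} (parent is {os.getpid()})")
```

The worker ids printed should differ from the parent's id, since each task runs in a child process rather than in a thread of the parent.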

To create a process pool, instantiate the class and specify the number of worker processes using the max_workers argument. For instance:

from concurrent.futures import ProcessPoolExecutor

# Create a process pool with 10 workers

executor = ProcessPoolExecutor(max_workers=10)

You can send tasks to the process pool using the map() and submit() methods.

map() works like Python’s built-in map(). It takes a function and an iterable, and executes the function for each item as a separate task in the process pool. If the function returns values, you get an iterator over the results, in the same order as the input items.

Note, though, that the call to map() itself doesn’t block the program; retrieving each result from the iterator blocks until its task is done.
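Here is a hedged sketch of map() on a process pool with CPU-bound work. The function count_primes and the list limits are illustrative only. The task is defined at module level so it can be pickled and sent to the workers, and the pool is created under a main guard.

```python
from concurrent.futures import ProcessPoolExecutor

def count_primes(limit):
    # Deliberately naive CPU-bound work: count the primes below `limit`.
    count = 0
    for n in range(2, limit):
        if all(n % d for d in range(2, int(n ** 0.5) + 1)):
            count += 1
    return count

if __name__ == "__main__":
    limits = [10_000, 20_000, 30_000, 40_000]
    with ProcessPoolExecutor(max_workers=4) as pool:
        # chunksize controls how many items are sent to a worker at a time;
        # 1 is the default and is spelled out here only for visibility.
        for limit, primes in zip(limits, pool.map(count_primes, limits, chunksize=1)):
            print(limit, primes)
```

For many small tasks, a larger chunksize can reduce the overhead of passing items between processes; for a handful of large tasks like these, the default is usually fine.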

The general pattern is the same as with threads:

# call a function on each item in a list and process results
for result in executor.map(task, items):
    print(result)  # process result...

You can also send tasks using submit(), which takes a target function and its arguments and returns a Future object.

The Future lets you query the task’s status (e.g., done(), running(), or cancelled()) and retrieve its result or exception when ready. Just remember that result() and exception() block until the task finishes.

# submit a task to the pool and get a future immediately
future = executor.submit(task, item)
# get the result once the task is done
result = future.result()

When done with the process pool, call shutdown() to release worker processes and their resources.

# shutdown the process pool
executor.shutdown()

You can simplify creating and closing the process pool by using a context manager, which calls shutdown() automatically.

Example:

# create a process pool

with ProcessPoolExecutor(max_workers=10) as executor:

# call a function on each item in a list and process results

for result in executor.map(task, items):

# process result...

# ...

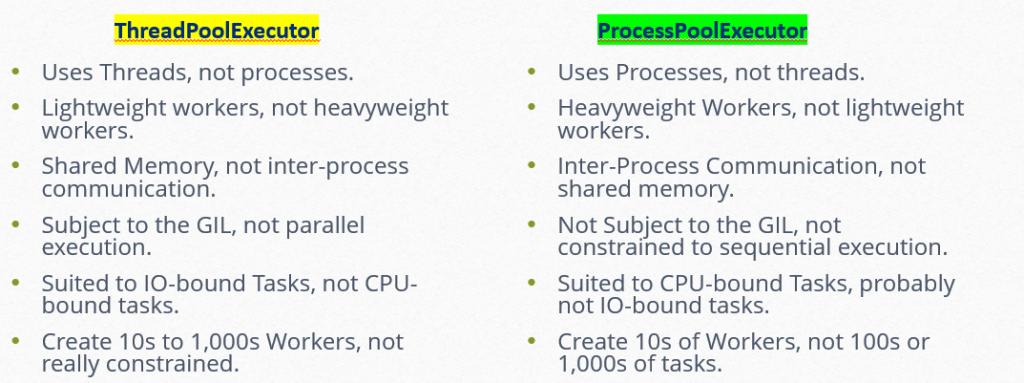

# shutdown is called automaticallyComparing ThreadPoolExecutor and ProcessPoolExecutor

Now that we have a good grasp of ThreadPoolExecutor and ProcessPoolExecutor, let’s explore their similarities.

Common Ancestry with Executor

Both ThreadPoolExecutor and ProcessPoolExecutor inherit from the Executor class in concurrent.futures.

This abstract class defines a unified interface for executing tasks asynchronously, including key methods such as:

- submit()

- map()

- shutdown()

Consequently, both classes share the same lifecycle: create the pool, submit tasks, and shut the pool down.

Both classes also live in the same concurrent.futures module.

Pool Management for Versatile Task Execution

Both classes manage a pool of workers that can execute many kinds of tasks.

Neither restricts the tasks you submit in terms of:

- Task duration: tasks can be long or short.

- Task types: tasks can be uniform or varied.

In essence, both classes provide a flexible workforce for handling a wide range of tasks.
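As a small illustration of that flexibility, the sketch below submits three unrelated tasks of different durations to one pool. The functions quick and slow are hypothetical, and sum is Python's built-in.

```python
import time
from concurrent.futures import ThreadPoolExecutor

def quick(text):
    # A short task that returns almost immediately.
    return text.upper()

def slow(seconds):
    # A longer task; the sleep stands in for real work.
    time.sleep(seconds)
    return f"slept {seconds}s"

with ThreadPoolExecutor(max_workers=3) as pool:
    futures = [
        pool.submit(quick, "hello"),
        pool.submit(slow, 0.2),
        pool.submit(sum, [1, 2, 3]),
    ]
    # Collect results in submission order, regardless of which finished first.
    outcomes = [f.result() for f in futures]

print(outcomes)  # ['HELLO', 'slept 0.2s', 6]
```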

Consistent Usage of Futures

Both classes return Future instances upon submitting tasks.

This uniformity ensures a consistent interface for monitoring task status and fetching results from ongoing tasks.

Moreover, it lets you use the module-level utility functions wait() and as_completed() when coordinating groups of tasks through their Future objects.
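A brief sketch of both helpers, assuming a hypothetical task nap that sleeps for a given time: as_completed() yields futures as they finish, while wait() blocks until a condition is met and returns the sets of finished and unfinished futures.

```python
import time
from concurrent.futures import ALL_COMPLETED, ThreadPoolExecutor, as_completed, wait

def nap(seconds):
    # Hypothetical task: sleep, then report how long it slept.
    time.sleep(seconds)
    return seconds

with ThreadPoolExecutor(max_workers=3) as pool:
    futures = [pool.submit(nap, s) for s in (0.3, 0.1, 0.2)]

    # Futures are yielded in completion order, so the shortest nap
    # usually comes first even though it was submitted second.
    finished_order = [f.result() for f in as_completed(futures)]

    # Block until every future is done; returns (done, not_done) sets.
    done, not_done = wait(futures, return_when=ALL_COMPLETED)

print(finished_order)
print(len(done), len(not_done))
```

The same two functions accept futures from a ProcessPoolExecutor, since they only depend on the Future interface.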

These commonalities make it easy to move between the two classes: what you learn about one carries over to the other, and code can be written against the shared Executor interface so that it switches between threads and processes with little change.
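One possible way to write executor-agnostic code is to accept any Executor and use only the methods of the base class. In this sketch, run_batch and cube are hypothetical names; the caller decides whether threads or processes do the work.

```python
from concurrent.futures import Executor, ProcessPoolExecutor, ThreadPoolExecutor

def cube(n):
    # Defined at module level so it can also be sent to worker processes.
    return n ** 3

def run_batch(executor: Executor, items):
    # Only methods defined on the Executor base class are used here.
    return list(executor.map(cube, items))

if __name__ == "__main__":
    for pool_cls in (ThreadPoolExecutor, ProcessPoolExecutor):
        with pool_cls(max_workers=2) as pool:
            print(pool_cls.__name__, run_batch(pool, [1, 2, 3]))
```

One practical difference remains when switching: with a process pool, the task function and its arguments must be picklable, which is why cube is a plain module-level function rather than a lambda.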